Google’s Complicated History With JavaScript

JavaScript has long been one of the SEO’s greatest enemies – you can do a lot of cool things with JavaScript, especially with the introduction of new frameworks like AngularJS; SEO though has historically been a challenge for Google. Rendering content or links that were served in JavaScript has been one of Googlebot’s biggest historical challenges. This meant that if you primarily used JavaScript to display the important content on a page – such as information and descriptions in a tabbed product page – you might as well have an empty page.

Google has made several announcements about how they are better at indexing JavaScript content. Please remember, better is from a very low baseline. From testing, we were able to figure out that initially this meant that Google could understand content when all of the JS was included inline. Then they announced the escaped fragment – which essentially meant: Hey Googlebot, look over here to see what the content looks like when you see this indicator because you won’t be able to fully render this content.

Today we are at a point where JavaScript frameworks, such as AngularJS, are becoming much more powerful and commonplace. Realizing that their escaped fragments solution is not well suited for the growing popularity of Angular and other JavaScript frameworks Google has investigated significantly in indexing JavaScript, Google announced in 2015 that they can crawl JavaScript just fine as long as it’s not blocked by robots.txt:

Today, as long as you’re not blocking Googlebot from crawling your JavaScript or CSS files, we are generally able to render and understand your web pages like modern browsers. To reflect this improvement, we recently updated our technical Webmaster Guidelines to recommend against disallowing Googlebot from crawling your site’s CSS or JS files.Basically: don’t worry about the SEO, leave that to us. You just focus on making good content.

Can Google Really Crawl JavaScript?

What Google can actually crawl vs what they claim is up for debate. Based on my experience, here is what I’ve seen Google be able to crawl and read:

Content in Initial Page Load

Generally Google is a lot better at reading JavaScript than it’s ever been. It seems like they are able to typically index content rendered in JavaScript that is visible upon page load to users. That said, Google does not seem to be able to index any content that requires a click. So if you are concerned about your AngularJS SEO, you should make sure that all content which you want to get indexed is visible on page load. This means any content requiring a click, such as an expanding container, contented in a tabbed experience, etc will likely not be indexable by Google.

Links in JavaScript

Typically, it seems like Google is able to discover links in JavaScript such as “onClick”,“javascript:openlink()”, and “javascript:window.location“. While I’ve seen Google crawling these URLs, it is important to note that URL discovery is much different than passing equity (PageRank) through URLs. I have yet to see Google passing equity through these JavaScript based links at this time.

JavaScript Redirects

Google is able to follow JavaScript redirects. I tested this out a few years ago and found that if page A used a JS redirect to point to page B, page A would drop out of the SERPs and would be replaced by page B. Generally, this one is pretty easy for Google to figure out. My tests were around non-competitive terms so I can’t say whether Google is treating it more like a 301 or 302 in terms of the equity passed – From my experience, 301’s tend to pass significantly more equity than 302’s despite Google’s vague claims that it doesn’t matter which redirect you use.

Wait Times

It is worth noting that JavaScript based pages take much longer for a bot to load and render than your standard HTML page as this is so much more resource intensive. In most cases, Google will wait about 4 seconds before they skip over your URL and move on. If it takes your page longer than this to load, you’re hosed. Screaming Frog is a great tool for testing this as you can set a custom wait time.

How to Render AngularJS Like Googlebot

Historically SEO’s looked at the source code of a page to determine if content on a page was indexable. If it’s in the source code, then you should be fine. With sites that rely heavily on AngularJS, oftentimes, much of the content will not actually be in the source code. Given Google’s improvements in AngularJS indexation, this means that you can no longer rely on the source code to evaluate the AngularJS indexing performance of a site. If you want to see if content is indexable, you can load the page and then right click and select inspect element BEFORE clicking on anything on the page. This will typically be the same content and sourcecode that Google is able to render.

Why None of This Matters

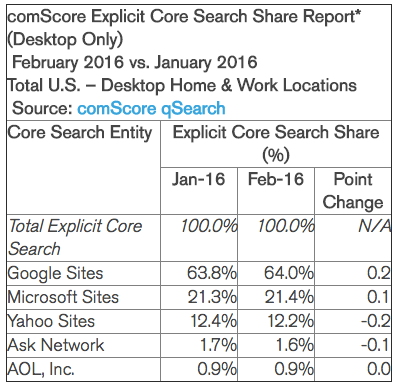

You may have notice how up until this point we have talked exclusively about Google. While Google commands the majority of the market share in the US, there are still other significant players. In the US, Bing is most notable with an alleged third of the market share (Microsoft properties + Yahoo search). If you operate in Russia, Yandex has 60% of the market. Similarly in China, Baidu holds about 80% of the market share.

Google is ridiculously better at rendering JavaScript frameworks than any of their competitors. While Google may be able to render your Angular page, Bing is going to choke up.

While Comscore claims that Bing powers a third of searches in the US, most sites I’ve seen range between 5% and 15% depending on the industry. It is unacceptable to forfeit even 5% of your traffic because you’ve decided to use Angular. You need a solution to ensure that your SEO performance does not decrease simply because you are rendering content in Angular.

The Key to AngularJS SEO

The Snapshot

The key to Angular SEO is prerendering – or creating an HTML snapshot to be served in the source code rather than relying on Google and other search engines to be able to properly render your Angular webpages. This will solve the issue of Google not being able to index content that is “locked” behind a click and will allow Google to fully index AngularJS content on your site.. Further, this will enable other search engines (Bing, Yandex, Baidu) to see any content on your page. When you are prerendering your pages, you should be able to look directly in the source code and see your content rather than needing to use the inspect element functionality of Chrome.

URL Structure

While pre-rendering your content is critical, another important component of AngularJS SEO is making sure that your pages have a user (and search engine!) friendly URL. This means avoid the hash garbage in your URLs (ex: site.com/#/page) – which is one of the options for rendering URLs in Angular. To setup pretty / SEO friendly URLs, you will want to leverage the $routeProvider

and $locationProvider to set your routing to HTML5 mode.

Resources:

XML Sitemaps

As a follow up to URLs, make sure that you are generating XML sitemaps that include your canonical URLs and that they are all submitted in Google Search Console (and Bing Webmaster Tools). This will help ensure that search engines can find and crawl all of your important URLs.

How to Pre-render Your Pages for Angular SEO

The easiest way to prerender / create a server side render of your client side render application is to use middleware. The best software solution I’ve found for this is prerender.io, which crawls the site and creates a server side render version of your pages that gets served to bots.

If you want to configure Angular to deliver a pre-rendered version of your AngularJS pages for SEO rather than using middleware, you want to make sure you are using Angular2 (or newer) rather than the initial version of AngularJS. This is because, like React, Angular2 supports server side rendering. This will allow you to easily turn on prerendering to create HTML snapshots for SEO.

There are several guides to setting up Angular Universal / server side rendering to create your HTML snapshot:

If for whatever reason you don’t want to use Angular2 / Universal, you will need to roll your own pre-rendering through something like phantom.js. If you want to use a service to do most of the heavy lifting for you, you can use something like prerender.io, but you’ll still need dev support to integrate with your platform.

Thanks, really appreciate this. We weren’t server side rendering, this should really help us improve our SEO performance.